The Unofficial KubeCon EU '26 SRE Track

6 talks to add to your schedule

Alerting is often treated as an operational afterthought. It’s configured late, adjusted manually, and slowly drifts out of sync with the systems it’s meant to protect. That approach might survive in relatively static environments. It breaks down quickly in AI systems.

At Mistral AI, production is always evolving. Models are added, updated, and deprecated. Capabilities differ between versions. Correctness matters as much as availability, and failures aren’t always deterministic. In that environment, traditional alerting just doesn’t cut and introduces risk.

Checks go missing. Routing becomes inconsistent. Pager noise increases even as blind spots appear.

As explained by Mistral AI Senior SRE Devon Mizelle in a recent webinar, the solution wasn’t to add more dashboards or tune thresholds. It was to make alerting deterministic. Terraform became the source of truth: not only for infrastructure, but for what should be monitored, how alerts are grouped, and who owns them.

This post walks through how that approach works in practice, and why treating alerting as code became non-negotiable.

AI systems introduce failure modes that traditional alerting models don’t handle well: models evolve independently of application code, capabilities vary across versions, non-deterministic behavior makes correctness harder to validate than simple uptime.

In practice, alerting systems fail in three ways.

None of these problems are unique to AI. What changes is the pace. When production surfaces evolve daily, alerting that depends on humans remembering to configure things will always lag behind.

The core decision was simple: if alerting matters, it needs the same guarantees as infrastructure.

That means version control, reviewability, reproducibility, and the ability to reason about system behavior from code alone. Terraform became the source of truth not just for provisioning resources, but for expressing alerting invariants:

Instead of describing alerting in terms of UI configuration, it’s described as desired state. Terraform evaluates live system inputs, generates the required checks, and wires them into routing and escalation logic. If something exists in production, it is either monitored by definition — or Terraform fails.

“If you can’t recreate your stack as infrastructure as code, then it’s kind of not infrastructure as code.” – Devon Mizelle, Senior SRE at Mistral AI

This eliminates an entire class of failure. Missing monitors stop being human mistakes and become configuration errors. Routing changes are reviewed like any other infrastructure change. Rolling back alerting behavior is as straightforward as reverting a commit.

Just as importantly, alerting becomes predictable. Engineers can look at the code and understand what will trigger an alert, who it will page, and why, without clicking through dashboards or relying on tribal knowledge.

This also makes alerting a safe target for automation, including AI-assisted changes, because the constraints are explicit and reviewable.

One of the hardest problems in alerting isn’t how to monitor something: it’s knowing what exists.

In a traditional service, the surface area is relatively static: endpoints, queues, jobs. In an AI platform, that assumption doesn’t hold. Models come and go. Capabilities differ. Some are experimental, some are internal, some are user-facing.

Hardcoding monitors in Terraform doesn’t solve this. It just moves the drift elsewhere.

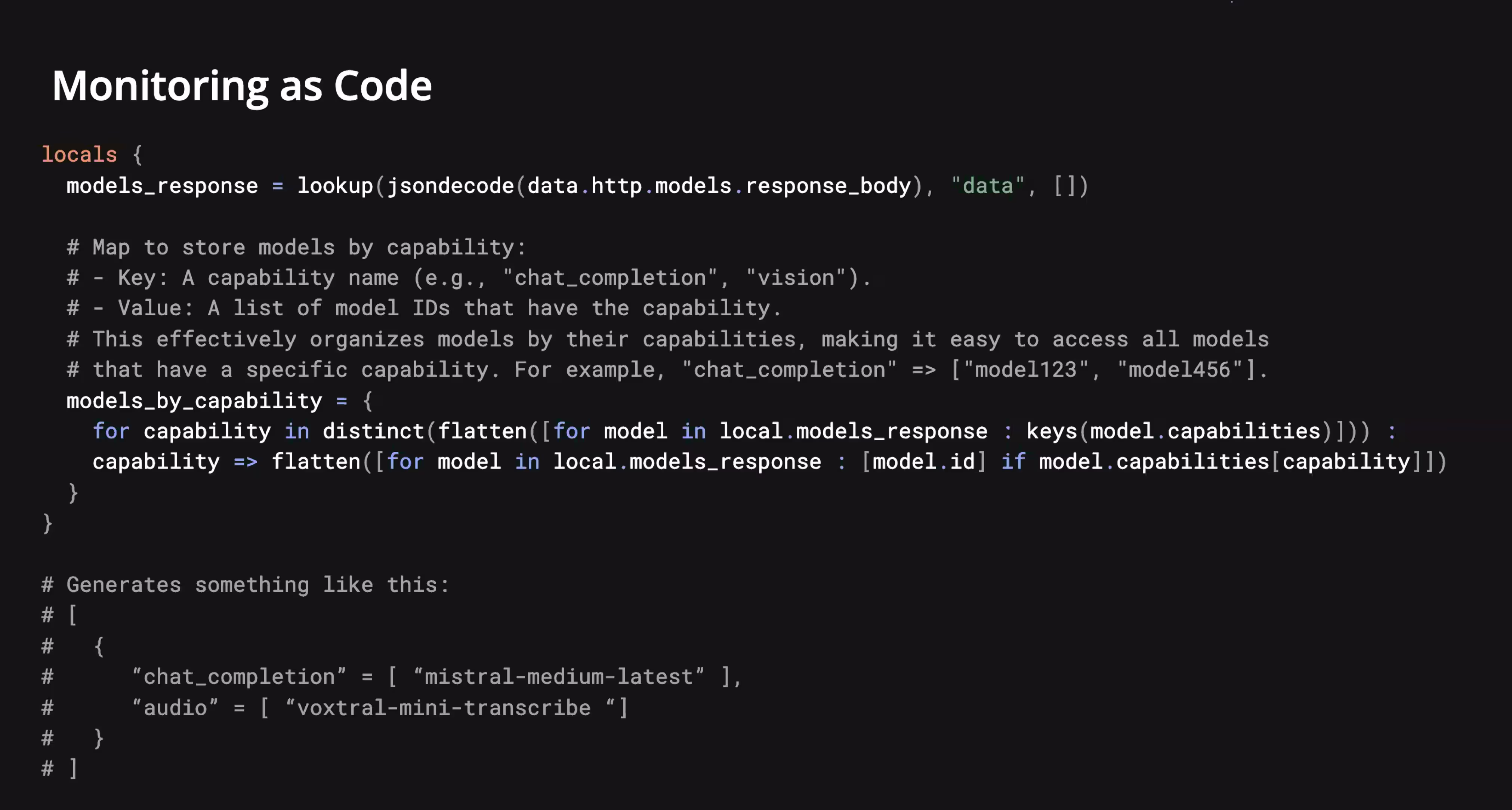

Instead, alerting configuration starts by querying live system state. Terraform fetches the list of available models directly from the API and parses their declared capabilities. Rather than reasoning about individual models, the system reasons about capabilities: chat completion, audio transcription, vision, and so on.

There’s no room for accidental divergence where one model pages after two failures and another after five, or where one validates correctness and another only checks availability. If the capability is public, the monitoring contract is identical.

“When Terraform runs, it picks up what models are available and configures all the synthetic checks consistently.”

Adding a new model doesn’t require copying an existing check or remembering which dashboard to update. Terraform evaluates the new state and generates the required monitor automatically.

Removal is symmetrical. When a model is no longer advertised, Terraform removes its corresponding check. Stale monitors don’t accumulate.

At this point, alerting stops being something engineers maintain and becomes something they derive.

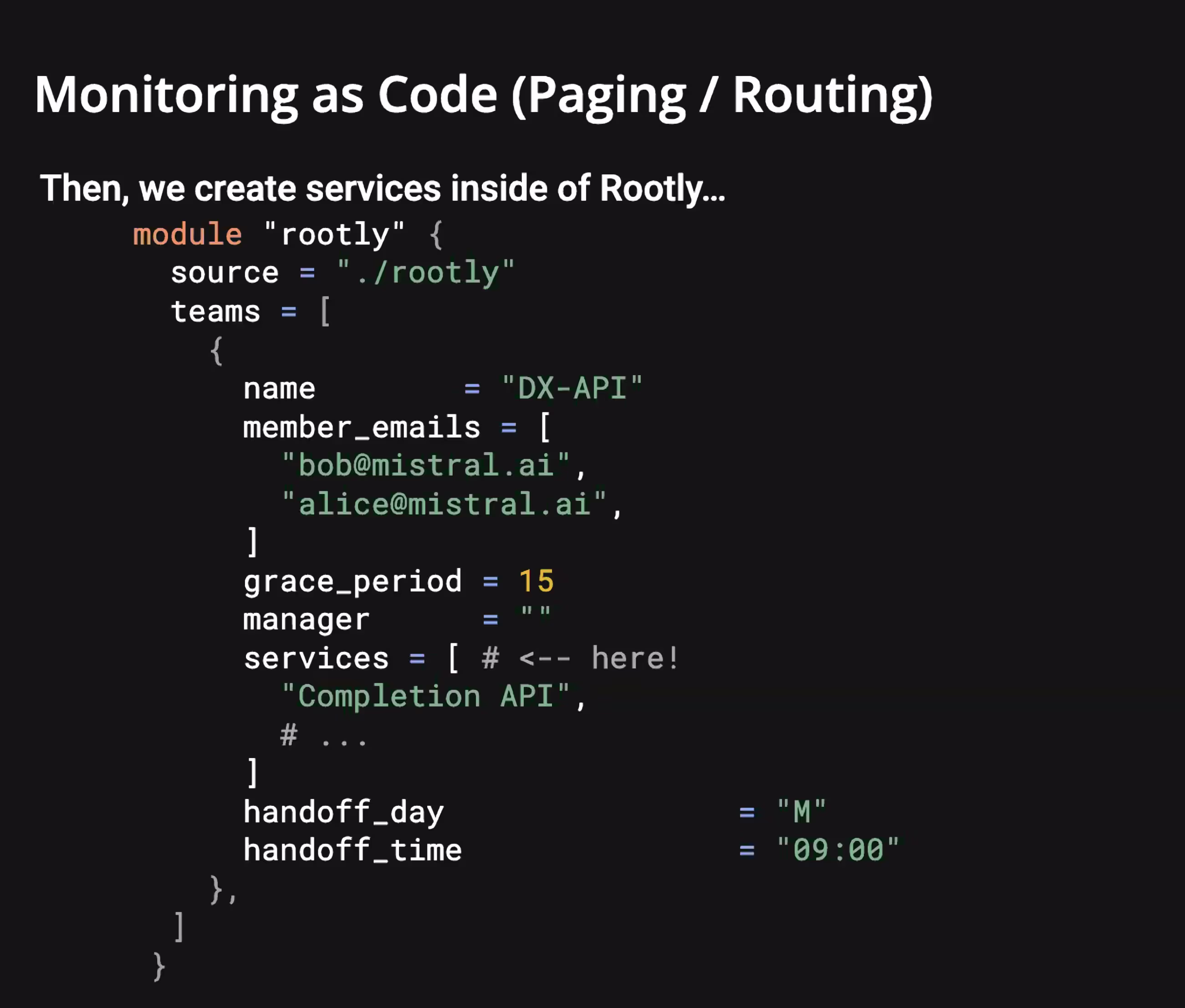

Once monitors are generated automatically, the next problem is ownership. A failing check is only useful if it reliably reaches the right team, without embedding team knowledge into every check.

At Mistral AI, tags are treated as a contract, not metadata.

Each synthetic check is tagged based on what it represents, not who owns it. A check validating chat completions carries a completion_api tag. It doesn’t know which team is on call or how escalation works.

Detection describes signal. Routing describes responsibility.

Routing logic consumes those tags and maps them to services. Services represent ownership boundaries with associated escalation policies. Changing ownership doesn’t require touching checks. Adding a new capability doesn’t require modifying routing logic, as long as the tag exists, the system already knows how to handle it.

This keeps detection stable while ownership evolves, which is exactly what you want in a growing organization.

Routing is where many alerting systems quietly collapse. Rules accumulate. Exceptions pile up. Eventually, no one is entirely sure why a given alert pages a specific team.

Defining routing entirely in Terraform avoids that failure mode.

Routing rules map tags to services, and services define escalation. When an alert arrives, routing is deterministic:

There’s no UI-only state and no ambiguity. If routing changes, it’s reviewed. If something goes wrong, it can be rolled back.

This also enables controlled alert grouping. Alerts related to the same service roll up into a single incident. Unrelated failures remain separate, even if they occur at the same time. Noise is reduced without hiding signal.

Noise reduction is often handled reactively: suppress alerts, add delays, widen thresholds. That usually trades fatigue for blind spots.

Here, noise reduction is structural.

Because checks are consistent and routing is service-based, related failures naturally collapse into a single incident. Multiple failing checks reinforce the same signal instead of creating multiple pages.

Exceptions are handled explicitly. Experimental or internal-only models can be excluded from paging without affecting the rest of the system. The rule is simple: if something is publicly advertised, it must be monitored and paged on. If it isn’t, it shouldn’t wake anyone up.

The result is fewer alerts, but stronger signal. Pages reflect user impact, not internal churn.

Once alerting is fully expressed as code, another door opens: delegation.

Because checks, routing, and ownership are defined declaratively in Terraform, changing alerting no longer requires deep, implicit knowledge of the system. The constraints are encoded. The rules are explicit. That makes alerting a viable target for AI-assisted changes.

At Mistral, engineers use Mistral Vibe, their AI coding agent, to handle observability tasks such as adding teams, updating alerting rules, or extending monitoring coverage. Engineers describe the intent; Vibe generates the corresponding Terraform changes.

This only works because Terraform is the source of truth.

The agent isn’t inventing behavior. It operates within well-defined abstractions: modules, services, tags, and invariants. The output is reviewed as code, validated by CI, and merged like any other infrastructure change.

“As long as you give the coding agent proper documentation and abstractions, it can figure out how to perform these tasks.”

From an SRE perspective, that distinction matters. AI isn’t deciding what should page or who should own it. Those decisions are encoded ahead of time. The agent handles the mechanical work of applying them consistently.

Alerting changes become faster, but not riskier, because the guardrails already exist.

The most noticeable impact of this approach isn’t just fewer alerts, it’s less thinking required to work with the system at all.

For developers, adding a new model or capability doesn’t require remembering to configure monitoring as a separate step. If the model is exposed, Terraform ensures the rest follows automatically.

For SREs, the alerting surface becomes legible again. There are fewer tools, fewer hidden states, and fewer edge cases to keep in working memory. Alerting behavior can be understood by reading code, not by clicking through dashboards.

During incidents, that predictability matters. Cognitive load is lowest when the system behaves consistently and explanations are obvious. Alerting as code moves complexity out of the incident and into design time — where it belongs.

Once alerting is deterministic and derived from system state, higher-order automation becomes possible.

AI-assisted changes to alerting configuration become safer because the blast radius is explicit and reviewable. Incident tooling can rely on consistent tags and services to gather context automatically. Even remediation workflows become easier to reason about when alerting behavior is explicit.

Those are follow-on benefits. The foundation is simpler: alerting should be code, and code should reflect reality.