
Over the past year, more than 46 companies started offering what they call an “AI SRE.”

The name is enticing, sure. But it’s also confusing. Most of these vendors say they’ll replace your “most experienced SRE” with AI. That makes you wonder whether they’ve ever worked alongside a seasoned SRE, but that’s beside the point.

As the old adage says, naming is hard. And “AI SRE” is how the industry has decided to name solutions that correlate logs, metrics, and traces to automate root cause analysis and recommend (and even execute) actions to resolve incidents, when possible.

This concept is not new. Our very own Head of Rootly AI Labs, Sylvain Kalache, has held a patent on self-healing systems for over 10 years, dating from his time as an SRE at LinkedIn (which uses Rootly for incident management).

I’ve been heads-down in the AI SRE space since the concept started circulating. In this article, I want to break down everything I’ve found (capabilities, under-the-hood mechanics, adoption patterns, etc.). I’ll keep updating this article as my understanding evolves and as we learn more about AI SRE.

We’ve lived with traditional SRE workflows for decades, so we know how they work. You check your dashboards, you jump when alerts fire, and you start forming hypotheses about what could’ve gone wrong.

Incident response has historically been human-driven and reactive, requiring engineers to triage alerts, reconstruct context, escalate across teams, and mitigate under time pressure. AI SRE introduces assistance into that loop, transforming the reliability pipeline from manual investigation to predictive and assisted remediation.

Other concepts to contrast AI SRE with:

In my opinion, two converging forces explain why AI SRE is emerging now:

Modern production infrastructure has outpaced the cognitive and operational bandwidth of human-only SRE models. Multi-cloud, serverless, edge compute, ephemeral environments, and microservices introduced a combinatorial surface of failure that cannot be reasoned about through dashboards and Slack alone. The result is an industry-wide reliability bottleneck that shows up in metrics, headcount, cost, morale, and customer experience.

Applications decomposed into hundreds of microservices, queues, proxies, and data stores. SLIs and SLOs became distributed across teams and failure domains, requiring responders to reconstruct causal graphs under pressure. Latency amplification, cascading failures, and circuit-breaker flapping became harder to diagnose without machine correlation across logs, metrics, traces, and deployment metadata.

Industry signal: Fortune 500 cloud-native teams report thousands of components per environment, with incident bridges now spanning 6–20+ functional owners during SEV0–1 events.

Even mature orgs drown in noisy alerts. Alerts on the golden signals (latency, traffic, errors, and saturation) fire faster than humans can triage them. Repetitive playbooks, context switching, and 2 a.m. wake-ups contribute to burnout and responder churn.

Known pattern: Google’s SRE books and multiple surveys attribute rising on-call burnout to alert noise, context fragmentation, and operational toil.
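
Much of this noise can be cut before any model reasons about it, by collapsing repeated alerts that share a fingerprint. The sketch below is a minimal illustration, assuming a hypothetical `Alert` record fingerprinted on `(service, signal)` and a 5-minute grouping window; production platforms typically use richer fingerprints and topology-aware grouping.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Alert:
    # Hypothetical alert shape: which service fired, on which signal, and when.
    service: str
    signal: str  # e.g. "latency", "traffic", "errors", "saturation"
    fired_at: datetime


def group_alerts(alerts: list[Alert], window: timedelta = timedelta(minutes=5)) -> list[list[Alert]]:
    """Collapse alerts sharing a (service, signal) fingerprint into one group
    while each new alert arrives within `window` of the group's latest alert."""
    groups: list[list[Alert]] = []
    latest_group: dict[tuple[str, str], list[Alert]] = {}
    for alert in sorted(alerts, key=lambda a: a.fired_at):
        key = (alert.service, alert.signal)
        group = latest_group.get(key)
        if group is not None and alert.fired_at - group[-1].fired_at <= window:
            group.append(alert)  # same ongoing signal: fold it into the existing group
        else:
            group = [alert]  # first alert for this fingerprint, or the gap was too long
            latest_group[key] = group
            groups.append(group)
    return groups


t0 = datetime(2024, 1, 1, 2, 47)
alerts = [
    Alert("payments", "errors", t0),
    Alert("catalog", "latency", t0 + timedelta(minutes=1)),
    Alert("payments", "errors", t0 + timedelta(minutes=2)),
    Alert("payments", "errors", t0 + timedelta(minutes=30)),
]
groups = group_alerts(alerts)
print([(g[0].service, len(g)) for g in groups])  # [('payments', 2), ('catalog', 1), ('payments', 1)]
```

Four alerts become three groups: the two payment errors two minutes apart merge, while the one 28 minutes later opens a new group.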

Incident resolution depends on tribal knowledge encoded in Slack, tickets, runbooks, code comments, and past postmortems. The engineer with the answer may not be on the bridge, or may be asleep because of time zone rotation. Crisis memory also decays: past SEVs become difficult to learn from without structured extraction.

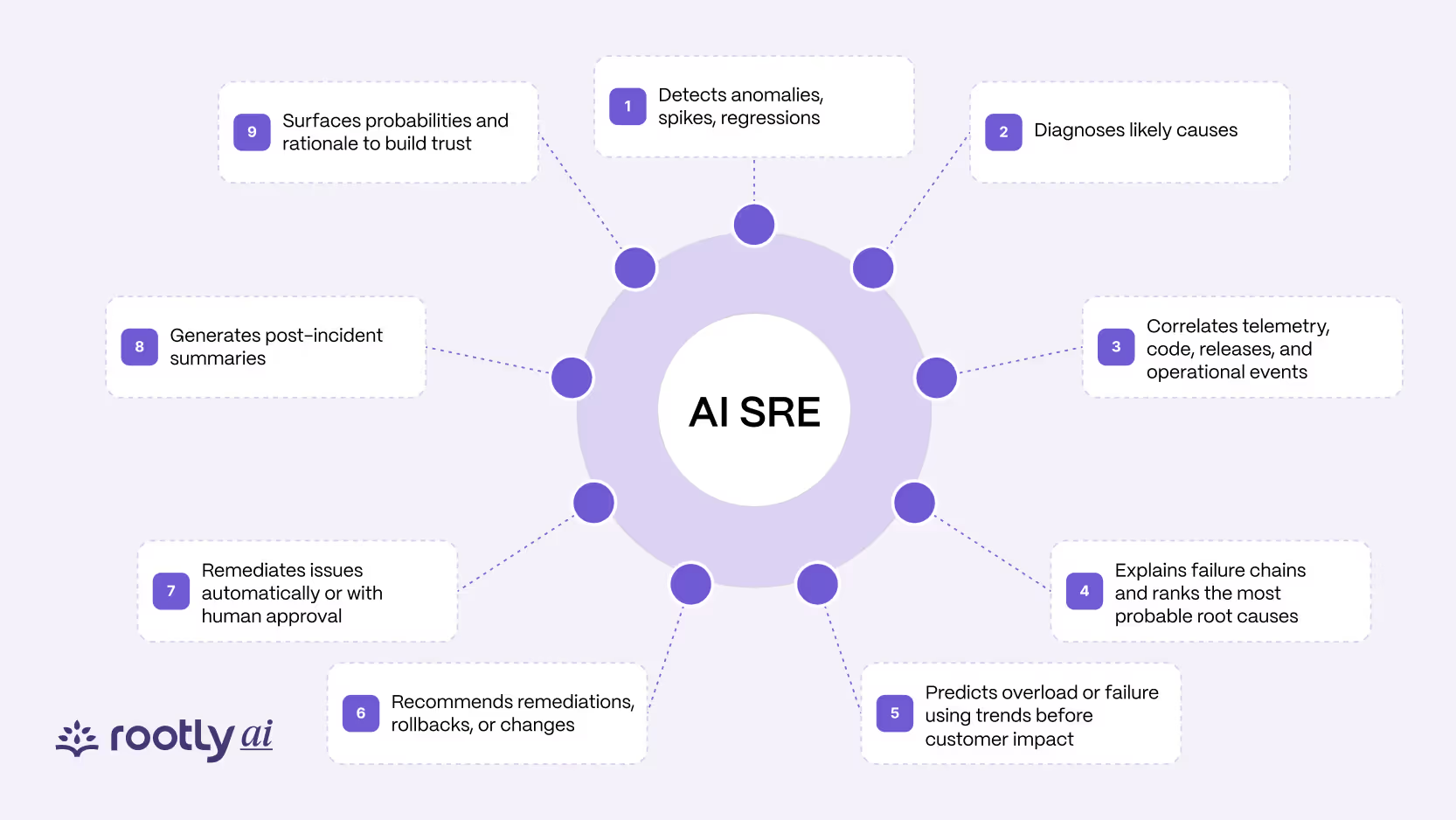

AI SRE platforms expand what it means to operate production by executing capabilities that are traditionally spread across many tools and humans.

The core capability surface includes:

This topology creates a reliability feedback loop that turns raw telemetry into operational outcomes rather than dashboards.

LLMs accelerated AI SRE adoption by making the incident domain legible to machines. They perform tasks that historically required senior SREs with deep system context:

“Payment latency likely caused by Catalog deploy at 14:03 UTC (confidence 0.74).”

For SRE practitioners, this eliminates the slowest part of incident handling: context assembly.
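
A statement like the Catalog-deploy hypothesis above usually starts from change correlation: which deploys landed shortly before the anomaly, on the affected service or on something it depends on? The sketch below is a minimal, hypothetical version. The `Deploy` record, the dependency map, the 60-minute lookback, and the scoring weights are all assumptions, timestamps are naive datetimes treated as UTC, and the score is a heuristic ranking, not a calibrated confidence.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Deploy:
    service: str
    at: datetime


def rank_deploy_suspects(
    anomaly_service: str,
    anomaly_start: datetime,
    deploys: list[Deploy],
    dependencies: dict[str, set[str]],
    lookback: timedelta = timedelta(minutes=60),
) -> list[tuple[float, Deploy]]:
    """Rank deploys that landed within `lookback` before an anomaly.

    Illustrative weights: recency contributes up to 0.5, and touching the
    anomalous service or one of its dependencies adds 0.4.
    """
    suspects = []
    for d in deploys:
        gap = anomaly_start - d.at
        if gap < timedelta(0) or gap > lookback:
            continue  # deployed after the anomaly began, or too long before it
        recency = 1 - gap / lookback  # 1.0 means "right before the anomaly"
        related = d.service == anomaly_service or d.service in dependencies.get(anomaly_service, set())
        suspects.append((round(0.5 * recency + (0.4 if related else 0.0), 2), d))
    return sorted(suspects, key=lambda s: s[0], reverse=True)


ranked = rank_deploy_suspects(
    "payments",
    datetime(2024, 1, 1, 14, 10),
    [Deploy("catalog", datetime(2024, 1, 1, 14, 3)), Deploy("search", datetime(2024, 1, 1, 13, 20))],
    dependencies={"payments": {"catalog"}},
)
score, top = ranked[0]
print(f"Payment latency likely caused by {top.service} deploy at {top.at:%H:%M} UTC (score {score})")
# Payment latency likely caused by catalog deploy at 14:03 UTC (score 0.84)
```

The catalog deploy wins because it is both recent (7 minutes before) and a known dependency of payments; the unrelated search deploy 50 minutes earlier scores 0.08.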

Modern AI SRE systems typically combine:

These elements let AI answer questions that previously required tribal knowledge, such as:

“Have we seen this before?”

“What changed recently?”

“What is the root cause?”

“What should we do?”
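
“Have we seen this before?” is typically answered with retrieval over past incidents. Production systems usually rely on learned embeddings and a vector index; the sketch below substitutes plain bag-of-words cosine similarity so it runs with the standard library alone. The incident IDs, summaries, and the 0.2 cutoff are hypothetical.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    # Lowercased word counts: a crude stand-in for a learned embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similar_incidents(query: str, past: dict[str, str], top_k: int = 3, min_score: float = 0.2) -> list[tuple[str, float]]:
    """Return up to `top_k` (incident_id, score) pairs for past summaries resembling `query`."""
    q = _vectorize(query)
    scored = [(incident_id, _cosine(q, _vectorize(summary))) for incident_id, summary in past.items()]
    matches = [s for s in scored if s[1] >= min_score]
    return sorted(matches, key=lambda s: s[1], reverse=True)[:top_k]


past = {
    "INC-101": "checkout errors after catalog deploy, payment service timeouts",
    "INC-202": "disk full on logging cluster",
    "INC-303": "payment service latency from connection pool exhaustion",
}
for incident_id, score in similar_incidents("payment service errors during checkout", past):
    print(incident_id, round(score, 2))
```

Swapping `_vectorize` for a real embedding model keeps the rest of the retrieval logic unchanged, which is why the two are kept separate.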

Production environments still require human authority for critical actions. AI SRE introduces graded autonomy rather than a binary on/off automation model. We see four maturity stages in the field:

Maturity is driven by:

Enterprises rarely jump directly to autonomous remediation; they progress through trust-building cycles during SEV handling.

As GitHub Staff SWE Sean Goedecke puts it, when you get paged in the middle of the night, you are far from your peak performance. You are more of a “tired, confused, and vaguely panicky engineer.” The 2:47 AM Test illustrates where AI SRE delivers disproportionate value: high-stakes, low-context incidents where customer impact compounds quickly and cognitive load is high.

It is 2:47 AM on a weekday. An e-commerce platform begins showing intermittent checkout failures. Dashboards indicate elevated error rates in the payment service, but the causal chain is unclear. The on-call engineer, already focused on a separate database issue, is paged and must reconstruct context under time pressure.

In a human-only workflow, the responder must manually assemble context across multiple systems:

In parallel, noisy alerts and false positives expand the decision surface. The responder must not only find the real failure but also suppress misleading signals emitted from unrelated components. This increases cognitive load and elongates the investigative window.

Most of this work is operational toil, and resolution speed is bounded by how quickly a human can gather, correlate, and interpret clues from distributed systems. The toil compounds as microservices and ownership boundaries multiply.

This investigation loop often adds 30–60 minutes to Mean-Time-to-Detect (MTTD) before mitigation begins. During that window:

Meanwhile, the interruption cost of waking an engineer at 2:47 AM introduces fatigue, context switching, and degraded judgment.

Once detection is complete, another manual escalation chain begins, often involving multiple teams and domain experts, and every hop adds to Mean-Time-to-Recovery (MTTR).
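
Both metrics are simple averages over incident timelines. The sketch below assumes a hypothetical `Incident` record with three timestamps and measures both intervals from the start of impact; definitions vary between organizations (some measure MTTR from detection or from the first alert), so adjust the subtraction to match yours.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    started: datetime   # customer impact began
    detected: datetime  # a responder (or system) identified the problem
    resolved: datetime  # service was restored


def mttd_mttr_minutes(incidents: list[Incident]) -> tuple[float, float]:
    """Return (MTTD, MTTR) in minutes, both measured from impact start."""
    minute = timedelta(minutes=1)
    mttd = mean((i.detected - i.started) / minute for i in incidents)
    mttr = mean((i.resolved - i.started) / minute for i in incidents)
    return mttd, mttr


night = Incident(datetime(2024, 1, 1, 2, 47), datetime(2024, 1, 1, 3, 32), datetime(2024, 1, 1, 4, 17))
day = Incident(datetime(2024, 1, 2, 10, 0), datetime(2024, 1, 2, 10, 15), datetime(2024, 1, 2, 10, 45))
print(mttd_mttr_minutes([night, day]))  # (30.0, 67.5)
```

In this made-up pair, the 2:47 AM incident takes 45 minutes to detect versus 15 for the daytime one, and it alone pulls the averages up.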

An AI SRE platform initiates parallel investigations immediately:

Because the platform suppresses false positives through correlation, responders only see reliability-relevant signals.

Within minutes, the platform infers that:

It then generates an incident narrative with:

Because the platform performs the investigative portion automatically, responders engage only for approvals and business trade-offs, with substantial toil reduction and reduced cognitive load.
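
The parallel investigations described above map naturally onto concurrent I/O. Below is a minimal asyncio sketch; the three check functions are hypothetical stubs that return canned findings (or fail) where a real platform would query its deploy log, database metrics, and dependency health, and the 30-second timeout is an assumption.

```python
import asyncio


# Hypothetical checks: each stub sleeps briefly in place of a real query.
async def check_recent_deploys(service: str) -> dict:
    await asyncio.sleep(0.01)
    return {"check": "deploys", "finding": f"deploy to a dependency of {service} shortly before errors", "suspicious": True}


async def check_database(service: str) -> dict:
    await asyncio.sleep(0.01)
    return {"check": "database", "finding": "replication lag within normal range", "suspicious": False}


async def check_dependencies(service: str) -> dict:
    await asyncio.sleep(0.01)
    raise ConnectionError("status endpoint unreachable")  # simulate a failing check


async def investigate(service: str, timeout_s: float = 30.0) -> list[dict]:
    """Run independent checks concurrently and return the suspicious findings."""
    checks = [check_recent_deploys(service), check_database(service), check_dependencies(service)]
    # return_exceptions=True keeps one broken check from sinking the whole investigation.
    results = await asyncio.wait_for(asyncio.gather(*checks, return_exceptions=True), timeout=timeout_s)
    findings = [r for r in results if not isinstance(r, BaseException)]
    return [f for f in findings if f["suspicious"]]


print(asyncio.run(investigate("payments")))
```

The total wall-clock time is roughly that of the slowest check rather than the sum of all of them, which is where the minutes saved at 2:47 AM come from.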

The 2:47 AM Test consistently demonstrates measurable reliability outcomes:

Some vendors refer to the automated remediation interval as MTTR-A (Automated MTTR): the time during which machines execute playbooks within defined guardrails.

When cognitive load is high and customer impact is rising, AI SRE collapses both MTTD and MTTR while suppressing false positives, reducing toil, and minimizing interruption cost.

This pattern emerges consistently during:

In all cases, the binding constraint is human cognitive bandwidth, not observability data.

Organizations do not move directly from zero automation to autonomous remediation. Successful adoption of AI SRE follows a staged approach that builds trust, reduces risk, and aligns automation with existing incident management norms.

Most teams progress through four maturity stages:

1. LLM-Supported (Read-Only)

The system observes incidents, correlates telemetry, and suggests actions. Humans remain fully in control.

2. LLM-Advised (Recommendation Mode)

The system proposes remediations with rationale and confidence scores. Engineers validate alignment with real-world operational behavior.

3. Semi-Autonomous (Approval Mode)

The system executes actions after human approval. Reversible, low-risk actions become automated.

4. Autonomous (Guardrailed Execution)

The system executes bounded actions automatically within defined guardrails. Higher-risk systems continue to require approval.

Enterprises rarely skip stages; the curve is governed by trust, observability quality, and risk posture.
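
One way to make the stages concrete is as a policy that decides whether a proposed action is only suggested, queued for approval, or executed. The mapping below is one reading of the four stages, not a standard; the `reversible` and `high_risk` flags are hypothetical inputs that would come from an action catalog, and guardrail checks are assumed to happen separately.

```python
from enum import IntEnum


class Stage(IntEnum):
    LLM_SUPPORTED = 1    # read-only: observe, correlate, suggest
    LLM_ADVISED = 2      # recommendations with rationale and confidence
    SEMI_AUTONOMOUS = 3  # execute after approval; reversible low-risk actions automated
    AUTONOMOUS = 4       # bounded actions run automatically within guardrails


def execution_mode(stage: Stage, reversible: bool, high_risk: bool) -> str:
    """Return "suggest", "needs_approval", or "auto" for a proposed action."""
    if stage <= Stage.LLM_ADVISED:
        return "suggest"  # stages 1 and 2 never execute anything
    if high_risk:
        return "needs_approval"  # payments, identity, etc. keep an approval gate at every stage
    if stage == Stage.SEMI_AUTONOMOUS:
        return "auto" if reversible else "needs_approval"
    return "auto"  # stage 4: non-high-risk actions run, assuming guardrail checks passed


for stage in Stage:
    print(stage.name, execution_mode(stage, reversible=False, high_risk=False))
```

Using an `IntEnum` lets the policy compare stages with `<=`, so moving a team up a stage is a one-value change rather than a rewrite of the rules.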

Begin by allowing the platform to observe incidents, analyze patterns, and recommend actions without execution. This builds operator confidence and creates a shared frame of reference for what “good” remediation looks like.

Engineers can compare recommendations to what they would have done manually, which surfaces gaps in reasoning, runbooks, or data.

Once recommendations consistently align with operator behavior, organizations typically automate low-risk, reversible actions such as:

Higher-risk flows (payments, identity, trading, government workloads) continue to require explicit approval. Risk and reversibility, not feature completeness, determine automation priority.

Guardrails define the operational perimeter of automation. They typically include:

Guardrails maintain safety without stalling adoption.
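
Guardrails are easiest to reason about as explicit, checkable rules evaluated before any automated action runs. The policy below is hypothetical: the allowlist, protected services, blast-radius cap, and rate limit are illustrative values, and each platform exposes its own configuration schema. Returning every violation, rather than a bare yes/no, gives the approver and the audit trail a reason.

```python
from dataclasses import dataclass, field


@dataclass
class Guardrails:
    # Hypothetical policy values; real platforms define their own schema.
    allowed_actions: set[str] = field(default_factory=lambda: {"restart_pod", "scale_out", "rollback_deploy"})
    protected_services: set[str] = field(default_factory=lambda: {"payments", "identity"})
    max_instances_affected: int = 5  # blast-radius cap
    max_actions_per_hour: int = 3    # automation rate limit


@dataclass
class Action:
    kind: str
    service: str
    instances_affected: int
    has_rollback: bool


def violations(action: Action, rails: Guardrails, actions_last_hour: int) -> list[str]:
    """List every guardrail the action breaks; an empty list means it may run."""
    reasons = []
    if action.kind not in rails.allowed_actions:
        reasons.append(f"action {action.kind!r} is not on the allowlist")
    if action.service in rails.protected_services:
        reasons.append(f"service {action.service!r} requires human approval")
    if action.instances_affected > rails.max_instances_affected:
        reasons.append("blast radius exceeds the cap")
    if not action.has_rollback:
        reasons.append("no rollback path")
    if actions_last_hour >= rails.max_actions_per_hour:
        reasons.append("automation rate limit reached")
    return reasons


rails = Guardrails()
print(violations(Action("restart_pod", "catalog", 2, True), rails, actions_last_hour=0))  # []
print(violations(Action("scale_out", "payments", 8, True), rails, actions_last_hour=1))
```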

Human adjudication is critical during early phases. Acceptance, rejection, or modification of AI-generated actions becomes feedback that tunes future behavior. Over time, the system learns organizational preferences such as:

This feedback loop shifts the system from generic reasoning to organization-specific reasoning.
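
A minimal version of this loop just counts verdicts per action type and flags types whose proposals operators consistently accept unchanged. This is a deliberately simple proxy, assuming hypothetical thresholds (90% acceptance over at least 20 proposals); it does not model the richer preference learning a real platform might do.

```python
from collections import defaultdict

VERDICTS = ("accepted", "modified", "rejected")


class AdjudicationLog:
    """Count operator verdicts on AI-proposed actions, per action type."""

    def __init__(self, promote_at: float = 0.9, min_samples: int = 20) -> None:
        # Hypothetical thresholds: at least 20 proposals, 90% accepted unchanged.
        self.promote_at = promote_at
        self.min_samples = min_samples
        self.counts: dict[str, dict[str, int]] = defaultdict(lambda: dict.fromkeys(VERDICTS, 0))

    def record(self, action_type: str, verdict: str) -> None:
        if verdict not in VERDICTS:
            raise ValueError(f"unknown verdict: {verdict}")
        self.counts[action_type][verdict] += 1

    def acceptance_rate(self, action_type: str) -> float:
        c = self.counts[action_type]
        total = sum(c.values())
        return c["accepted"] / total if total else 0.0

    def ready_for_automation(self, action_type: str) -> bool:
        """True once this action type has enough history and a high enough acceptance rate."""
        total = sum(self.counts[action_type].values())
        return total >= self.min_samples and self.acceptance_rate(action_type) >= self.promote_at


log = AdjudicationLog()
for _ in range(19):
    log.record("restart_pod", "accepted")
log.record("restart_pod", "modified")
print(log.acceptance_rate("restart_pod"), log.ready_for_automation("restart_pod"))  # 0.95 True
```

The minimum sample size matters as much as the rate: without it, a single accepted proposal would read as 100% agreement.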

AI SRE should extend the existing operational ecosystem rather than replace it. Integration points typically include:

Fitting into familiar workflows reduces cognitive friction and accelerates adoption.

Effective AI SRE depends on broad and consistent signal access, including:

Without these surfaces, the system cannot construct accurate causal graphs or propose safe remediations.

Common blockers observed across enterprises include:

These are cultural and structural issues, not technical limitations.

Not all organizations share the same tolerance for automation. For example:

The strategy should match the business domain, not a generic automation model.

Despite strong results in incident operations, AI SRE systems have constraints and require sound operational judgment. The following limitations shape adoption timelines, automation scope, and trust in the system.

AI platforms may lack full business context. A slowdown during a maintenance window may not be urgent, while a minor latency regression during peak purchasing hours may have revenue impact. Business calendars, user cohorts, SLAs/SLOs, regulatory windows, and contractual obligations are not always encoded in telemetry.
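
Part of this gap can be closed by feeding business context into severity scoring explicitly. The sketch below assumes a hypothetical business calendar (one maintenance window and evening peak purchasing hours) and a SEV0 to SEV4 scale where lower is more severe; it shifts severity by one level in each direction.

```python
from datetime import datetime, time

# Hypothetical business calendar; in practice this lives outside telemetry.
MAINTENANCE_WINDOWS = [(datetime(2024, 11, 24, 1, 0), datetime(2024, 11, 24, 3, 0))]
PEAK_HOURS = (time(18, 0), time(22, 0))  # local peak purchasing hours


def adjust_severity(base_sev: int, at: datetime) -> int:
    """Shift a SEV level (0 = most severe, 4 = least) using business context."""
    if any(start <= at < end for start, end in MAINTENANCE_WINDOWS):
        return min(base_sev + 1, 4)  # disruption is expected: downgrade one level
    if PEAK_HOURS[0] <= at.time() < PEAK_HOURS[1]:
        return max(base_sev - 1, 0)  # revenue at stake: escalate one level
    return base_sev


print(adjust_severity(2, datetime(2024, 11, 24, 2, 0)))   # 3: inside the maintenance window
print(adjust_severity(2, datetime(2024, 11, 29, 19, 30)))  # 1: peak purchasing hours
```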

LLMs can produce hallucinated or overconfident explanations, particularly when telemetry is sparse or ambiguous. Outputs may be nondeterministic, meaning the same input can yield different reasoning paths, requiring operators to verify hypotheses before taking action.
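
One mitigation for nondeterminism is self-consistency voting: ask the model for a root cause several times and act only when most runs agree. It is swapped in here as an illustration, not as a method any particular vendor is known to use. Agreement lowers, but does not remove, the risk of a confident wrong answer, since runs can agree on the same mistake. How the samples are obtained is out of scope; the exact-string matching and the 0.6 threshold are simplifying assumptions.

```python
from collections import Counter
from typing import Optional


def consensus_root_cause(samples: list[str], min_agreement: float = 0.6) -> tuple[Optional[str], float]:
    """Given root-cause hypotheses from repeated, independent model runs on the
    same incident, return (majority_hypothesis, agreement), or (None, agreement)
    when the runs disagree too much to act on without human review."""
    if not samples:
        return None, 0.0
    normalized = [s.strip().lower() for s in samples]
    top, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    return (top if agreement >= min_agreement else None), agreement


runs = ["Catalog deploy", "catalog deploy", "DB failover", "catalog deploy ", "catalog deploy"]
print(consensus_root_cause(runs))  # ('catalog deploy', 0.8)
```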

Modern infrastructure contains edge cases, partial failures, and emergent behaviors that are difficult to model. Rare interactions may produce failures that neither traditional tools nor AI systems can immediately diagnose, especially in environments with limited observability or non-standard protocols.

Remediation without human oversight carries business and compliance risk. Critical paths (payments, trading, authentication, government workloads) typically retain approval gates until telemetry quality, guardrails, and trust mature. Organizations must consider blast radius, reversibility, rollback capability, and auditability before autonomous execution.

Enterprises require visibility into what executed, why, and under what conditions. Without audit logs, reasoning traces, and decision artifacts, automated remediation becomes difficult to govern. Regulated industries often require:

These requirements are not optional in financial, healthcare, or government environments.
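
A common building block for these requirements is a tamper-evident audit trail: each record captures what executed, why, and under what conditions, and embeds the hash of the previous record, so altering history breaks verification. This is a minimal in-memory sketch with a hypothetical record schema; a real deployment would also persist entries to write-once storage and anchor the latest hash externally, and hash chaining alone does not make a system compliant.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, action: str, reason: str, approved_by: Optional[str], context: dict) -> dict:
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "action": action,            # what executed
            "reason": reason,            # why, e.g. the model's stated hypothesis
            "approved_by": approved_by,  # None means it ran autonomously
            "context": context,          # conditions: incident ID, guardrail results, ...
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; editing any entry, or removing one that has successors, fails."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("restart_pod catalog-7f9c", "error spike after catalog deploy", None, {"incident": "INC-1"})
log.append("rollback_deploy catalog", "restart did not clear errors", "oncall@example.com", {"incident": "INC-1"})
print(log.verify())  # True
log.entries[0]["reason"] = "edited after the fact"
print(log.verify())  # False
```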

Effective AI SRE relies on access to diverse telemetry surfaces:

If observability is inconsistent or siloed, diagnosis and remediation quality degrade. Upfront engineering work is required to stitch these surfaces together across tooling ecosystems.

Incident artifacts may contain sensitive customer data, infrastructure details, internal topology, or operational secrets. Organizations must consider:

Security teams often gate adoption until boundaries are clear.
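
A typical first control is to redact obvious secrets and personal data before incident text is sent to a model or stored outside its trust boundary. The regular expressions below are illustrative only (emails, IPv4 addresses, `key=value` credentials, and 13 to 16 digit card-like numbers); real deployments generally rely on vetted DLP tooling and patterns tuned to their own identifiers, and regexes will both miss and over-match.

```python
import re

# Illustrative patterns, applied in order; not a complete PII or secrets detector.
REDACTIONS = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "<EMAIL>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP>"),
    (re.compile(r"(?i)\b(api[_-]?key|token|password|secret)\s*[:=]\s*\S+"), r"\1=<REDACTED>"),
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "<CARD>"),
]


def redact(text: str) -> str:
    """Mask common sensitive values in incident text (logs, chat, tickets)."""
    for pattern, replacement in REDACTIONS:
        text = pattern.sub(replacement, text)
    return text


print(redact("user jane@example.com hit 10.0.3.17 with token=abc123XYZ"))
# user <EMAIL> hit <IP> with token=<REDACTED>
```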

Root cause analysis (RCA) accuracy and remediation recommendation quality vary by domain, system maturity, telemetry density, and incident type. RCA expectations should be calibrated against baselines such as “faster triage” rather than “infallible diagnosis.”
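
Calibrating expectations is easier with a scorecard computed from incidents whose cause a postmortem has confirmed. The sketch below assumes a hypothetical per-incident record holding the AI's ranked hypotheses, the confirmed cause, and minutes to a first plausible hypothesis for both the AI and the human responders. It reports top-1 and top-k hit rates alongside median time saved, which maps to the “faster triage” baseline rather than to infallible diagnosis.

```python
from statistics import median


def rca_scorecard(cases: list[dict], k: int = 3) -> dict:
    """Score AI root-cause suggestions against postmortem-confirmed causes.

    Each case (hypothetical schema) holds:
      ranked_causes   - AI hypotheses, best first
      confirmed_cause - cause recorded in the postmortem
      ai_minutes, human_minutes - time to a first plausible hypothesis
    """
    if not cases:
        raise ValueError("no cases to score")
    top1 = sum(c["ranked_causes"][:1] == [c["confirmed_cause"]] for c in cases)
    topk = sum(c["confirmed_cause"] in c["ranked_causes"][:k] for c in cases)
    return {
        "top1_accuracy": top1 / len(cases),
        f"top{k}_accuracy": topk / len(cases),
        "median_minutes_saved": median(c["human_minutes"] - c["ai_minutes"] for c in cases),
    }


cases = [
    {"ranked_causes": ["catalog deploy", "db failover"], "confirmed_cause": "catalog deploy", "ai_minutes": 4, "human_minutes": 40},
    {"ranked_causes": ["cache eviction", "dns"], "confirmed_cause": "dns", "ai_minutes": 6, "human_minutes": 30},
    {"ranked_causes": ["cert expiry"], "confirmed_cause": "quota limit", "ai_minutes": 5, "human_minutes": 50},
]
print(rca_scorecard(cases))
```

Minutes saved is counted here even where the AI's hypotheses missed (the third case); a stricter scorecard might only credit time on hits.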

Inference workloads (particularly those requiring continuous streaming analysis) introduce new cost curves tied to model size, data volume, and concurrency. Platform teams must evaluate:

Cost efficiency varies significantly across vendors and architectures.
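
The cost curve is mostly arithmetic over token volume. The function below is a back-of-the-envelope sketch: incident volume, tokens per investigation, continuous-analysis tokens per day, and the per-million-token price are all hypothetical inputs, and it blends input and output pricing into one rate, which most providers do not.

```python
def monthly_inference_cost(
    incidents_per_month: int,
    tokens_per_investigation: int,
    usd_per_million_tokens: float,
    streaming_tokens_per_day: int = 0,
    days_per_month: int = 30,
) -> float:
    """Rough monthly model spend: on-demand investigations plus continuous analysis."""
    investigation_tokens = incidents_per_month * tokens_per_investigation
    streaming_tokens = streaming_tokens_per_day * days_per_month
    return (investigation_tokens + streaming_tokens) / 1_000_000 * usd_per_million_tokens


# Hypothetical volumes: 200 incidents x 150k tokens, plus 2M tokens/day of streaming analysis, at $5 per 1M tokens.
print(monthly_inference_cost(200, 150_000, 5.0, streaming_tokens_per_day=2_000_000))  # 450.0
```

With these made-up inputs, continuous analysis accounts for two thirds of the total (60M of 90M tokens).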

Most organizations lack large, consistently labeled incident datasets. Runbooks, tickets, chat threads, and postmortems often exist but are unstructured. This limits supervised learning and requires heavy use of embeddings, retrieval, or heuristic patterning to compensate.

Industries with strict regulatory mandates (e.g., finance, healthcare, energy, government) have constraints on automation, record retention, explainability, and human oversight. These constraints shape which portions of incident handling can be delegated to AI.

AI SRE does not replace SREs. It changes what SREs spend time on.

We observe three outcomes across teams piloting AI SRE:

For CTOs, this translates into increased engineering leverage rather than staff replacement.

Enterprises adopt AI SRE to address categories of operational pain:

Each use case aligns to a measurable metric (detection, diagnosis, remediation, or recovery).

Rolling out AI SRE is not a feature toggle. The most successful implementations follow a deliberate path:

Identify Pain Concentrations - Targeting noisy alerts, brittle dependencies, and after-hours pages delivers the fastest ROI.

Pilot in Low-Risk Domains - Non-critical or internal workflows let teams build trust in the system without business exposure.

Integrate Data Sources Early - Telemetry quality dictates model output quality.

Define Automation Policies - Human-in-the-loop rules, approvals, and rollbacks form part of the reliability contract.

Evaluate Vendors By Capability - Teams compare platforms by:

Buying decisions are less about hype and more about operational fit.

AI SRE success is not measured only in uptime. Leaders measure across three categories:

CTOs increasingly evaluate AI SRE as a force multiplier rather than a cost center.

The next wave of AI SRE moves from assisted remediation to closed-loop infrastructure.

We anticipate several trajectories:

The long arc points toward production systems that degrade gracefully and repair themselves without paging a human.

The AI SRE is a shift in how we think about running production. By combining the pattern-recognition power of AI with the hard-earned practices of site reliability engineering, we get systems that don’t just alert on problems but can help resolve them.

Sure, the tech is still maturing. There are limitations, edge cases, and plenty of integration work ahead. But the teams that start experimenting now will be ahead of the curve when more advanced capabilities land.

Success with AI SRE doesn’t come from flipping a switch. It takes thoughtful rollout, tight integration with your workflows, feedback loops that keep improving the system, and a team that understands how to work with their new AI capabilities.

The future of reliability is intelligent, proactive, and collaborative. And the sooner you start that journey, the sooner your team can spend less time firefighting, and more time shipping great things.

Curious where to begin? Start by mapping out your biggest operational headaches and identifying the workflows where automation can create real leverage. That is where AI SRE starts to earn its place on the team. At Rootly, we help organizations do exactly that and make the rollout practical.

Ready to explore it? Book a demo.